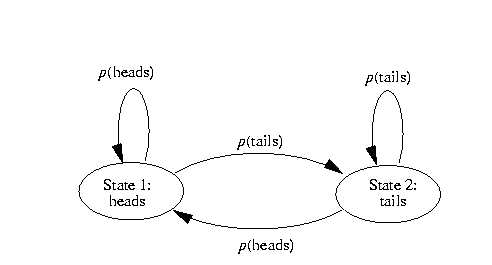

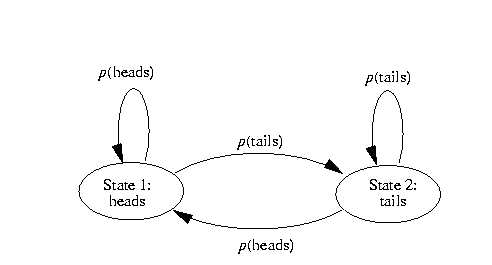

Figure 7.2. Observable Markov model of tossing a biased coin

(After Rabiner and Juang 1993)

Assume the following scenario. You are in a room with a curtain through which you cannot see what is happening. On the other side of the curtain is another person who is performing a coin-tossing experiment, using one or more coins. The coins may be biased. The person will not tell you which coin he selects at any time; he will only tell you the result of each coin flip, by calling "heads" or "tails" through the curtain. Thus, a sequence of hidden coin-tossing experiments is performed, with your observation sequence consisting of a series of heads and tails. A typical observation sequence would be

$$O = (o_1\ o_2\ o_3\ o_4\ o_5\ \ldots\ o_n) = (H\ H\ T\ T\ T\ H\ T\ \ldots\ H)$$

where H stands for heads and T stands for tails.

Given the above scenario, the question is: how do we build a Markov model to explain (model) the observed sequence of heads and tails?

The first problem is to decide what the states in the model correspond to, and then how many states the model should have. One possible choice is to assume that only a single biased coin is being tossed. In this case we could model the situation with a two-state model in which each state corresponds to the outcome of the previous toss (i.e., heads or tails). This model is depicted in Figure 7.2. Because each state is identified with an observed outcome, the Markov model is observable, and the only issue for complete specification of the model is to decide on the best value for its single parameter (i.e., the probability of, say, heads).
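The single-coin model can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a method given in the text: it takes the "best value" of the parameter to be the maximum-likelihood estimate (the observed fraction of heads), and it uses only the seven symbols shown explicitly in the example sequence. The function names `estimate_p_heads`, `transition_matrix`, and `simulate` are hypothetical.

```python
import random


def estimate_p_heads(observations):
    """Maximum-likelihood estimate of P(heads): the fraction of heads seen.

    Assumption: maximum likelihood is one reasonable reading of "best value";
    the text does not prescribe an estimator.
    """
    if not observations:
        raise ValueError("need at least one observation")
    heads = sum(1 for o in observations if o == "H")
    return heads / len(observations)


def transition_matrix(p_heads):
    """Two-state observable model; the state is the outcome of the previous toss.

    Tosses of a single coin are independent, so the probability of the next
    outcome is the same from either state and both rows are identical.
    """
    row = {"H": p_heads, "T": 1.0 - p_heads}
    return {"H": dict(row), "T": dict(row)}


def simulate(p_heads, n, seed=None):
    """Draw n tosses from the model (handy for sanity-checking the estimate)."""
    rng = random.Random(seed)
    return ["H" if rng.random() < p_heads else "T" for _ in range(n)]


# Only the explicitly listed prefix of the example sequence is used here.
observed = list("HHTTTHT")
p_hat = estimate_p_heads(observed)
A = transition_matrix(p_hat)
```

With this prefix, three of the seven tosses are heads, so the estimate is 3/7. The two identical rows of `A` make the point of the paragraph concrete: once the probability of heads is fixed, nothing else about the model remains to be specified.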
