University of Pennsylvania | University of Oxford Phonetics Laboratory | Linguistic Data Consortium, University of Pennsylvania

American

English sound

files at the LDC.

The transcribed American English speech in the LDC's current catalog

includes about 2,240 hours of two-party telephone conversations, 260

hours of task-oriented dialogs, 100 hours of group meetings, 1,255

hours of broadcast news, 30 hours of voice mail, and 300 hours of read

or prompted speech. As-yet unpublished LDC data includes about 1,000

hours of broadcast conversations (such as talk shows), and about 5,000

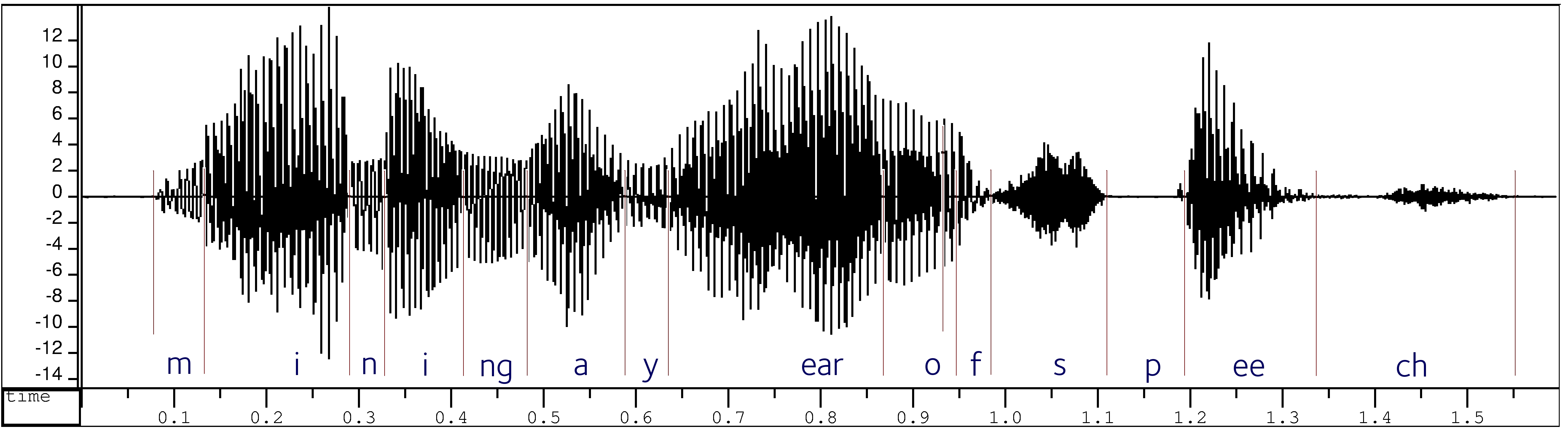

hours of U.S. Supreme Court oral arguments. The process of aligning all

of this at the word and phonetic-segment level is under way, using the

automatic forced-alignment techniques developed at Penn's Phonetics

Lab. We are in the

process of adding a large sample of political speeches and debates, and

a collection of open-access audiobooks from librivox.org.
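For a sense of scale, the approximate figures quoted above can be tallied directly. The short sketch below only sums the numbers given in the text; the dictionary keys and variable names are illustrative, and the planned additions (political speeches, audiobooks) are left out because no hours are stated for them.

```python
# Approximate hours per category, copied from the catalog summary above.
published_hours = {
    "two-party telephone conversations": 2240,
    "task-oriented dialogs": 260,
    "group meetings": 100,
    "broadcast news": 1255,
    "voice mail": 30,
    "read or prompted speech": 300,
}

# As-yet unpublished LDC data, also approximate.
unpublished_hours = {
    "broadcast conversations": 1000,
    "U.S. Supreme Court oral arguments": 5000,
}


def total_hours(table):
    """Sum the hours over all categories in one table."""
    return sum(table.values())


published_total = total_hours(published_hours)
unpublished_total = total_hours(unpublished_hours)
combined_total = published_total + unpublished_total

print(f"published:   about {published_total:,} hours")
print(f"unpublished: about {unpublished_total:,} hours")
print(f"combined:    about {combined_total:,} hours")
```

On these figures the published American English material comes to roughly 4,185 hours and the unpublished material to roughly 6,000 hours, before any British English recordings are counted.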

British English sound files in the BNC. In a pilot project we digitized 3% of the BNC recordings to confirm that the audio quality is sufficient for the Penn Phonetics Lab Forced Aligner and to quantify the computational requirements. As part of the institutional contributions of Oxford University and the British Library (BL) to this project, the British Library Sound Archive (where the tapes are deposited) will have digitized at least 23% of the recordings by the start of the project, with the likelihood that a further 57% will be digitized using funding obtained from other sources. We already have access to the LDC and BNC data, rights to exploit it for our research, and rights to license it for research use. As licensing and other constraints allow, we aim to support scholars from other fields by including oral history recordings, newsreels, and other sources.

As an outcome of the project, we will create a new, Extended release of the BNC, in two parts: the existing text transcriptions, plus an additional structure of timing information that this project will produce. The text and timing information will be released in XML form by the BNC Consortium. We expect to make it available under the same license and via the same arrangements as the current text version of the BNC.

The British Library cannot publish the unprocessed, original audio, because the volunteers who collected the recordings were promised anonymity. Personal and place names are mentioned in the audio files, so these need to be deleted. We shall therefore anonymize the audio files and return them to the British Library, which will then prepare them for release and serve them via its Archival Sound Recordings project.
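The text does not say how the anonymization will be carried out. As one illustration of how word-level timing information could support it, the minimal sketch below silences the samples that fall inside time spans marked as containing names. The `Span` type, the `silence_spans` function, and the example values are all hypothetical; a real workflow would operate on audio files and would need a way to decide which words count as names.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Span:
    """A time interval to be silenced (hypothetical type, for illustration)."""
    start: float  # seconds from the beginning of the recording
    end: float    # seconds from the beginning of the recording


def silence_spans(samples: Sequence[int], sample_rate: int,
                  spans: Sequence[Span]) -> List[int]:
    """Return a copy of `samples` with every sample inside a span set to 0."""
    out = list(samples)
    for span in spans:
        # Convert times to sample indices, clamped to the recording's bounds.
        first = max(0, round(span.start * sample_rate))
        last = min(len(out), round(span.end * sample_rate))
        for i in range(first, last):
            out[i] = 0
    return out


# Toy example: one second of "audio" at 8 samples per second, with a name
# assumed to be spoken between 0.25 s and 0.5 s.
audio = [1] * 8
masked = silence_spans(audio, sample_rate=8, spans=[Span(0.25, 0.5)])
print(masked)
```

Driving the masking from aligned time spans means the same timing information produced for the transcriptions could, under these assumptions, be reused to locate the passages that must not be released.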

This dataset is several hundred times larger than the largest datasets previously used in research of this type; and just as important, it is much more diverse. Both the size and the diversity will make new kinds of research possible. But the most important thing of all is that the framework and tools that we are developing can easily be applied to any additional material for which both recordings and (standard orthographic) transcriptions exist. As a result, others will be able to apply our methods to new datasets of interest to them, including (for example) sociolinguistic interviews, oral histories, courtroom recordings, political speeches and debates, doctor-patient interactions, and so on.